Can Claude help you cheat your way through a Coding Interview?

Date: 10th October 2024

A Reddit user shared their experience of automating Leetcode problem-solving with the Claude 3.5 Sonnet API and Python. Their script autonomously solved 633 Leetcode problems in a 24-hour period with an 86% success rate, at an API cost of only $9. The project was an experiment to test whether AI could solve Leetcode problems autonomously, rather than an attempt to solve them as quickly as possible. The first step was to scrape the problem description from the Leetcode webpage and pass it to the Claude API for solution generation. Selenium was used for real-time interaction with Leetcode’s web elements.

Web scraping using Selenium and BeautifulSoup, Claude API, Python

Key Features and Insights:

- The script was designed to analyse test case results and detect failures based on text strings. Upon encountering a failed test case, it would retry the problem, using feedback from the failure to refine its solution. The AI had a limit of four total attempts per problem (one initial try, plus three retries) before giving up.

- The user was particularly interested in observing how the script handled failed test cases, especially because the models may have been trained on most Leetcode problems. This raised the question of how the AI would handle problems it had potentially never seen before, such as those added to Leetcode after the model’s training data was compiled. The focus was on whether the AI could solve problems autonomously and adapt to challenges it wasn’t specifically trained to handle.

- After experimenting with multiple models, including Google’s Gemini and OpenAI’s models, the user preferred Claude 3.5 Sonnet because of its strict adherence to structured prompts. Claude consistently followed instructions, especially when the user required responses in specific formats, such as code-only or nested JSON outputs.

- The script was built step by step, with each function added as the need arose. The initial challenge was to extract the problem description from the webpage using Selenium. Once the description was extracted, the script sent it to the Claude API for code generation.

- The tools used for this automation included:
  - Selenium WebDriver for interacting with the webpage.
  - BeautifulSoup4 for parsing HTML elements.
  - undetected_chromedriver to avoid detection by Leetcode’s anti-bot mechanisms.

- undetected_chromedriver is a specialised version of Selenium’s Chrome WebDriver, designed to evade the anti-bot and anti-scraping mechanisms that websites like Leetcode implement. It allowed the script to interact with the Leetcode website as if it were a regular user, so the automation could run continuously and solve hundreds of problems in a 24-hour period without being flagged or blocked. Without it, there is a higher likelihood that the script would have been blocked or limited by Leetcode’s bot detection systems.

-

The automation process involves a continuous loop of problem-solving. The loop can be broken down into details as follows:

-

Script begins by accessing the list of available problems, using Selenium WebDriver, navigating through the problem set.

-

It then identifies an unsolved problem that hasn’t been attempted in previous runs.

-

Once the problem has been selected, the script scrapes the problem definition, which includes the problem statement, input/output format, and constraints.

-

After the problem description is successfully extracted, the script sends it to Claude’s 3.5 Sonnet API. The API processes the problem and generates a solution in the form of executable code.

-

Once the API returns the solution, the script submits the code to Leetcode’s built-in code editor for execution. The code is automatically tested against the predefined test cases.

-

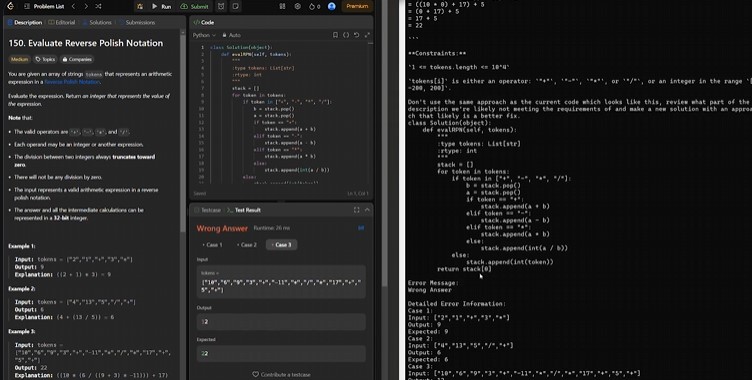

If the code fails one or more test cases, the script enters the retry phase. The failed cases are crucial feedback for Claude’s API. The script re-sends the problem, this time providing additional context from the failed test cases to the API to improve the solution. This iterative process continues for up to four attempts per problem: one initial attempt and three retries. Each retry is based on the feedback from the failed test cases.

-

If the problem was successfully solved, or if the allowed retries are exhausted, the script returns to the main problem list and marks them completed.

-

The script then automatically searches for the next unsolved and non-premium problem and repeats the entire process

- The script solved problems across all difficulty levels:
  - 217 easy problems
  - 359 medium problems
  - 57 hard problems

- Although the user did not track success rates based on problem difficulty, they noted that the overall success rate was 86%.
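The solve-and-retry behaviour described in this list can be sketched roughly as follows. This is a minimal illustration, not the Reddit user's actual script: the `generate` and `submit` callables are hypothetical stand-ins for the Claude API call and the Selenium submission step, and the failure strings in `FAILURE_MARKERS` and the prompt wording are assumptions.

```python
from typing import Callable, Optional, Tuple

MAX_ATTEMPTS = 4  # one initial try plus three retries, as described in the post

# Assumed failure strings; the text the real script matched is not given.
FAILURE_MARKERS = (
    "Wrong Answer",
    "Runtime Error",
    "Time Limit Exceeded",
    "Compile Error",
)


def has_failed(result_text: str) -> bool:
    """Detect a failed run by searching the result text for known failure strings."""
    return any(marker in result_text for marker in FAILURE_MARKERS)


def build_prompt(description: str, feedback: Optional[str]) -> str:
    """Build a code-only prompt, appending failure feedback on retries."""
    prompt = "Solve this problem. Reply with code only.\n\n" + description
    if feedback:
        prompt += "\n\nYour previous attempt failed with:\n" + feedback
    return prompt


def solve_with_retries(
    description: str,
    generate: Callable[[str], str],  # hypothetical: prompt -> solution code
    submit: Callable[[str], str],    # hypothetical: solution code -> result text
    max_attempts: int = MAX_ATTEMPTS,
) -> Tuple[bool, int]:
    """Return (solved, attempts_used) for a single problem."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = generate(build_prompt(description, feedback))
        result_text = submit(code)
        if not has_failed(result_text):
            return True, attempt
        feedback = result_text  # feed the failure back into the next prompt
    return False, max_attempts
```

Passing `generate` and `submit` in as parameters keeps the retry logic separate from the browser and API code, so it can be exercised with simple stub functions before any real requests are made.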

Comparisons and Challenges Faced:

- The user had initially tried Google’s Gemini and OpenAI’s models, but found them lacking in structure, especially when it came to adhering to formatting instructions. They praised Claude for its disciplined approach and remarked that OpenAI’s earlier models had a tendency to mix explanations with code, which caused issues when copying solutions.

- The user mentioned that Leetcode’s web structure is deeply nested, with elements hidden within 20 layers of HTML/CSS/JavaScript, making it difficult to identify relevant information (e.g., problem URLs, or whether a problem is premium). They overcame this by using Selenium for interaction and BeautifulSoup4 for parsing. Additionally, undetected_chromedriver was used to avoid being detected and blocked by Leetcode’s anti-bot measures, so the script could run without interruption.
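To illustrate the kind of parsing involved, here is a small sketch that pulls non-premium problem URLs out of deeply nested markup. Python's built-in `html.parser` stands in for BeautifulSoup4 so the example has no third-party dependencies, and the markup itself is hypothetical: the `role="row"` wrapper and the `premium-lock` class are assumptions for illustration, not Leetcode's real page structure.

```python
from html.parser import HTMLParser
from typing import List


class ProblemListParser(HTMLParser):
    """Collect one record per table row, regardless of nesting depth."""

    def __init__(self) -> None:
        super().__init__()
        self.rows = []  # each row: {"url": str or None, "premium": bool}

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        classes = (attr.get("class") or "").split()
        if attr.get("role") == "row":  # assumed row wrapper
            self.rows.append({"url": None, "premium": False})
        elif self.rows:
            row = self.rows[-1]
            href = attr.get("href") or ""
            if tag == "a" and href.startswith("/problems/"):
                row["url"] = href
            elif "premium-lock" in classes:  # assumed premium marker
                row["premium"] = True


def free_problem_urls(html: str) -> List[str]:
    """Return URLs of rows that have a problem link and no premium marker."""
    parser = ProblemListParser()
    parser.feed(html)
    return [r["url"] for r in parser.rows if r["url"] and not r["premium"]]


# Hypothetical page fragment used only to exercise the parser.
SAMPLE_HTML = """
<div role="rowgroup">
  <div role="row"><div><div><a href="/problems/two-sum/">Two Sum</a></div></div></div>
  <div role="row"><div><svg class="premium-lock"></svg><a href="/problems/paid-one/">Paid</a></div></div>
</div>
"""

free_urls = free_problem_urls(SAMPLE_HTML)
```

In a setup like the one described, the HTML string would come from the Selenium-driven browser rather than a hard-coded sample, and the selectors would need to be adjusted to whatever the live page actually uses.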

Conclusion:

This Reddit post demonstrates the potential of using AI to autonomously solve coding problems, specifically on competitive programming platforms like Leetcode. The project highlights the capability of Claude 3.5 Sonnet to solve a large number of problems cheaply and quickly (633 problems in 24 hours for about $9), even when encountering test cases that were possibly outside the scope of its training data. It also shows how combining AI with automation tools like Selenium can create systems that solve problems without human intervention.

The experiment raises important questions about the future of AI in software development, particularly in areas like competitive programming, where speed, accuracy, and adaptability are crucial. It also sheds light on the potential advantages and limitations of different AI models, especially when it comes to following structured prompts and handling new, unseen challenges.