Exploring ZHIPU's GLM-4-9B-Chat for RAG Workflows

Date: 13th October 2024

ZHIPU’s GLM-4-9B-Chat is gaining recognition for its performance in retrieval-augmented generation (RAG) tasks, especially in scenarios where minimising hallucination is critical and accurate, reliable output is required.

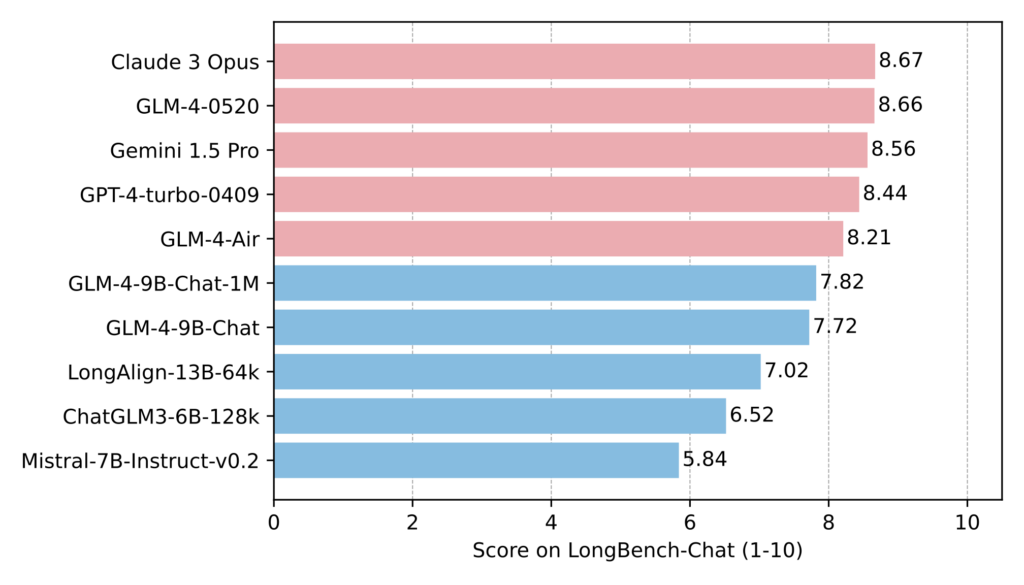

ZHIPU’s GLM-4-9B-Chat has emerged as a top-performing language model, especially for Retrieval-Augmented Generation (RAG) tasks that prioritise minimising hallucinations. Its rise to prominence is recent: many users first noticed it when it appeared in the Ollama models list and as the base model for LongWriter, a language model known for producing high-quality long-form content.

- The “Ollama models list” is the library of language models that can be downloaded and run through Ollama. Ollama is a tool for downloading, managing, and running language models locally, letting users deploy and customise models for tasks such as text generation and language understanding. The library includes models with different sizes and capabilities, so users can choose the one that best fits their needs.

According to the Hughes Hallucination Evaluation Model (HHEM) leaderboard, GLM-4-9B-Chat has a hallucination rate of only 1.3%, paired with a factual consistency rate of 98.7%. These figures suggest that the model reliably stays faithful to the text it is given, which is critical for RAG tasks where the integration of external knowledge is essential.

- The Hughes Hallucination Evaluation Model (HHEM) is used to score language models on their tendency to produce hallucinations (incorrect or fabricated information) in generated text. The resulting leaderboard helps compare models’ reliability, especially for tasks where minimising errors and ensuring factual accuracy are important, such as Retrieval-Augmented Generation (RAG).

- The leaderboard reports the hallucination rate (percentage of responses that include information not supported by the source) and the factual consistency rate (percentage of responses that stay consistent with the source). The two figures add up to 100%.

- Hallucinations refer to instances where a language model generates information that is either inaccurate, fabricated or not grounded in the provided data. This typically occurs when the model misinterprets the input or doesn’t have access to the full, correct context, leading it to “guess” or fabricate details in its output.
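As a rough illustration of how the two percentages relate, the sketch below computes them from a list of per-response judgements. The function name and the sample data are hypothetical; the real leaderboard derives its judgements from a scoring model rather than from hand-written booleans.

```python
def hallucination_rates(judgements):
    """Return (hallucination_rate, consistency_rate) as percentages.

    `judgements` is a list of booleans; True means the response was
    judged consistent with its source text.
    """
    if not judgements:
        raise ValueError("need at least one judgement")
    consistency_rate = 100.0 * sum(judgements) / len(judgements)
    return 100.0 - consistency_rate, consistency_rate


# Hypothetical sample: 1,000 responses, 13 of them judged inconsistent.
sample = [True] * 987 + [False] * 13
halluc, consistent = hallucination_rates(sample)
print(f"hallucination rate: {halluc:.1f}%")
print(f"factual consistency rate: {consistent:.1f}%")
```

The sample was chosen so that the result matches the figures quoted for GLM-4-9B-Chat; it says nothing about how many responses the leaderboard actually evaluates.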

Command-R was previously regarded as the leading model for RAG. However, its hallucination rate of 4.9%, while still relatively low, does not match the 1.3% achieved by GLM-4-9B-Chat. This gap led the author of the original post to adopt GLM-4-9B-Chat as their new go-to choice for RAG applications.

Contextual Analysis

RAG tasks require language models to retrieve relevant information from an external database or source and then integrate this data into a coherent response. The challenge lies in accurately merging retrieved facts with generated language while avoiding hallucinations, which can occur when a model invents information. GLM-4-9B-Chat’s position as the top model for hallucination reduction suggests that it has a lower tendency to produce such errors compared to other models.
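The "retrieve, then integrate" step can be sketched as a small prompt-building function. This is a minimal illustration, not the prompt used in the original setup: the function name, the instruction wording, and the sample passages are all assumptions.

```python
def build_rag_prompt(question, retrieved_chunks):
    """Combine retrieved passages and the user's question into one prompt."""
    context = "\n\n".join(
        f"[{i}] {chunk.strip()}"
        for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical retrieved passages.
chunks = [
    "GLM-4-9B-Chat was run with a 64K context window.",
    "ChromaDB stored the document embeddings.",
]
prompt = build_rag_prompt("Which vector database was used?", chunks)
print(prompt)
```

Telling the model to rely only on the supplied context, and to admit when the answer is missing, is a common way to discourage it from inventing details.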

The growing interest in the GLM-4-9B-Chat model may stem from its practical applications in fields like content creation, customer support, and research, where factual accuracy is crucial. Its position on the Hughes Hallucination Evaluation Model (HHEM) leaderboard indicates that GLM-4-9B-Chat can be more reliable for users who need accurate responses without fabricated details.

Technical Implementation and Usage

The post’s author describes their technical setup: an A100-enabled Azure virtual machine running GLM-4-9B-Chat at FP16 precision. The model operates within a 64K context window, although this could potentially be extended to 128K. At 64K context, the model consumes approximately 64GB of VRAM, with an additional 900MB required for the embedding model.

- An A100-enabled Azure virtual machine is a cloud-based server provided by Microsoft Azure, equipped with NVIDIA A100 GPUs, which are powerful graphics processing units designed for tasks like machine learning and deep learning. These GPUs offer high computational power, making them ideal for running large language models efficiently.

- FP16 precision refers to using 16-bit floating-point numbers (half precision) for calculations instead of the standard 32-bit (FP32). This reduces memory usage and speeds up processing while still maintaining an acceptable level of accuracy in model performance.
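To make the FP16 trade-off concrete, the sketch below uses Python's standard `struct` module to round a value to half precision and estimates the memory needed for the weights alone. The parameter count is an assumption ("9B" taken as roughly 9 billion), and the helper names are illustrative.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# Bytes used by one FP16 value and one FP32 value.
print(struct.calcsize("<e"), struct.calcsize("<f"))

# 0.1 cannot be stored exactly; FP16 keeps fewer significant digits.
print(to_fp16(0.1))

ASSUMED_PARAMS = 9e9  # assumption: "9B" read as about 9 billion parameters
fp16_gb = weight_memory_gb(ASSUMED_PARAMS, 2)
fp32_gb = weight_memory_gb(ASSUMED_PARAMS, 4)
print(f"FP16 weights: {fp16_gb:.0f} GB, FP32 weights: {fp32_gb:.0f} GB")
```

This covers the weights only. The roughly 64GB reported at 64K context is the footprint of the whole running system, which this sketch does not attempt to model.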

For optimal RAG performance, the post’s author pairs GLM-4-9B-Chat with “Nomic-embed-large” as the embedding model and uses ChromaDB as the vector database.

Each of the three components plays a distinct role in the Retrieval-Augmented Generation (RAG) pipeline:

- GLM-4-9B-Chat: This language model generates responses to user queries. For RAG, it needs to integrate information retrieved from external sources into its responses.

- Nomic-embed-large: An embedding model that converts text data into numerical vectors (representations of the text’s meaning). These vectors help the system understand the relationships between different pieces of text, making it easier to find relevant information.

- ChromaDB: A vector database used to store and manage these numerical vectors. It enables fast searching and retrieval of information by finding vectors (text) that are most similar to the query.
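The core idea behind vector retrieval can be sketched in plain Python, without the real embedding model or database. The three-dimensional vectors below are hand-made stand-ins for embeddings, and cosine similarity is used as one common similarity measure; the original post does not say which measure its setup used.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=2):
    """Return the k (text, score) pairs most similar to the query vector."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in store]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical store: real embeddings have hundreds of dimensions.
store = [
    ("Invoice processing policy", [0.9, 0.1, 0.0]),
    ("GPU memory requirements", [0.1, 0.9, 0.2]),
    ("Holiday schedule", [0.0, 0.2, 0.9]),
]
query = [0.2, 0.8, 0.1]  # stand-in embedding of "How much VRAM is needed?"

results = top_k(query, store, k=2)
for text, score in results:
    print(f"{score:.3f}  {text}")
```

A vector database performs the same kind of nearest-neighbour lookup, but with indexing so that it stays fast across many thousands of stored chunks.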

This setup returns responses to RAG prompts quickly, typically within 5-7 seconds, generating about 51.73 response tokens per second. The knowledge base consists of approximately 200 dense and complex PDFs, ranging in size from 100KB to 5MB. Ollama serves as the backend and Open WebUI as the front-end interface, making the overall workflow efficient and user-friendly.
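For readers who want to talk to the Ollama backend directly rather than through Open WebUI, the sketch below builds a request body for Ollama's `/api/chat` endpoint. It only constructs the JSON and does not send it. The model tag `glm4:9b` and the system prompt are assumptions; check the tag against the models installed locally.

```python
import json


def build_chat_request(question, context, model="glm4:9b", num_ctx=65536):
    """Build the JSON body for a POST to Ollama's /api/chat endpoint.

    The default model tag is an assumption; num_ctx=65536 corresponds
    to the 64K context window described in the text.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system",
             "content": "Answer using only the supplied context."},
            {"role": "user",
             "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
        "stream": False,
        "options": {"num_ctx": num_ctx},
    }


# Hypothetical question and retrieved context.
body = build_chat_request(
    "What is the refund policy?",
    "Refunds are issued within 30 days.",
)
print(json.dumps(body, indent=2))
```

On a default local installation, this body would be posted to `http://localhost:11434/api/chat`. Open WebUI normally handles this exchange, so the sketch is only useful for scripting or debugging.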

Markdown Formatting and Response Quality

A notable strength of GLM-4-9B-Chat is its handling of Markdown formatting in responses. The post’s author says the model’s use of Markdown is among the best they have encountered, which improves the readability and presentation of generated content. Well-structured, appropriately formatted responses add to the model’s appeal for use cases that require clear, professional communication.

- Markdown is a lightweight markup language used to style text. It supports elements such as headings, bold or italic text, lists, links, and code blocks. When a language model uses Markdown in its responses, it can organise the output with bullet points, numbered lists, or highlighted sections, which makes the response clearer and easier to scan, especially for tasks that require structured information or documentation.

Community Comparisons and Alternatives

Some users share their experiences with other models, such as Gemma 2-2B, noting that although GLM-4-9B-Chat performs well, the much smaller Gemma 2-2B is roughly on par with it in some cases.

- Gemma 2-2B is a language model from Google with roughly 2 billion parameters, designed for natural language processing tasks. It is smaller and less resource-intensive than models such as GLM-4-9B-Chat, making it suitable for applications where computational efficiency is important.

One user tested a Q6_K GGUF build of GLM-4-9B-Chat and found the answers to be generally satisfactory. However, they also acknowledged that differences in quality between models of varying sizes do exist, suggesting that while GLM-4-9B-Chat has a significant edge in accuracy, the gap may not be as wide in all use cases.

- GGUF is a file format for storing language models so that they can be run efficiently on ordinary hardware. Q6_K is a quantisation level: the model’s weights are stored with about 6 bits each instead of 16, much like compressing a file. The result takes up less memory and is easier to run on less powerful machines, at the cost of a small loss in precision.
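The space saving from quantisation can be estimated with simple arithmetic. In the sketch below, the parameter count (about 9 billion) and the average of 6.5625 bits per weight for Q6_K are assumptions based on commonly cited figures; actual file sizes differ because some tensors are stored at other precisions.

```python
def model_size_gb(num_params, bits_per_weight):
    """Approximate storage for the weights, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


ASSUMED_PARAMS = 9e9   # assumption: "9B" read as about 9 billion parameters
FP16_BITS = 16
Q6_K_BITS = 6.5625     # assumption: commonly cited average for Q6_K

fp16_size = model_size_gb(ASSUMED_PARAMS, FP16_BITS)
q6_size = model_size_gb(ASSUMED_PARAMS, Q6_K_BITS)

print(f"FP16: {fp16_size:.1f} GB")
print(f"Q6_K: {q6_size:.1f} GB")
print(f"Q6_K is about {q6_size / fp16_size:.0%} of the FP16 size")
```

Under these assumptions the quantised model needs well under half the storage of the FP16 version, which is why it suits machines with less memory.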

Analysis

The post provides insight into how GLM-4-9B-Chat’s low hallucination rate can significantly impact the quality of RAG tasks, especially when used for knowledge-intensive applications. The technical details about the configuration and the setup highlight how users can effectively deploy the model for high-performance RAG tasks. Additionally, the emphasis on Markdown formatting suggests that even seemingly small features can influence a model’s overall utility and user satisfaction.

The discussion underscores the importance of selecting appropriate chunking strategies for RAG tasks, as the choice of chunk size and overlap can significantly impact the quality of retrieved information. The comparisons to smaller models like Gemma 2-2B suggest that while GLM-4-9B-Chat is highly effective, smaller models can still compete in terms of quality for certain tasks. This may prompt further exploration into optimising models for specific use cases.
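Chunk size and overlap are easiest to understand with a small example. The function below is a minimal character-based sketch; the name and parameters are illustrative, and real pipelines often split on tokens, sentences, or document structure instead.

```python
def chunk_text(text, chunk_size, overlap):
    """Split text into chunks of chunk_size characters.

    Consecutive chunks share `overlap` characters so that content cut
    at a boundary still appears intact in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


text = "abcdefghijklmnopqrstuvwxyz"
chunks = chunk_text(text, chunk_size=10, overlap=3)
for chunk in chunks:
    print(chunk)
```

Larger chunks give the model more surrounding context per retrieved passage, while smaller chunks make retrieval more precise. Overlap trades some extra storage for fewer facts being split across a boundary.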

Conclusion

Overall, ZHIPU’s GLM-4-9B-Chat stands out in the RAG domain due to its low hallucination rate, efficient processing capabilities, and user-friendly features like Markdown formatting. It has quickly gained traction as a reliable option for those requiring accurate and well-formatted responses in complex data-driven tasks.